Form


Use the form node to collect information and identify intents. The form node is available only for voice channels.


Name

Give the node a name.

Form

Fill in the Form tab, as follows.

- Select the form type.

  | Form Types | Description |
  |---|---|
  | Built-in | Built-in form types suit most use cases. |
  | Custom | Custom form types suit use cases where the return values match DTMF or grammar values. |
  | Open | Open form types suit use cases where return values are picked from free-form conversational dialog. |

- Fill in the fields specific to the form type.

- Fill in the Return Values.

Built-in Form Types

Built-in form types suit most use cases. When you finish filling in the fields specific to the form type, remember to fill in the Return Values.

Studio uses Lumenvox as the Automatic Speech Recognition (ASR) provider for built-in form types.

|

Built-in Form Types |

Description |

|---|---|

|

Yes No |

Use for prompts that require a yes or no response. The speech-to-text return value is yes or no in all lowercase letters. |

|

Digit String |

Use for prompts where the required response is a sequence of digits. The speech-to-text return value contains digits only and can be of any length. An example response is 12345. |

|

Integer |

Use for prompts where the required response is a single digit. An example response is 7. |

|

Custom Digit String |

Use for prompts where the required response is a sequence of digits. You set the minimum and maximum lengths. For example, when prompting for a five digit ZIP code, set the minimum number of digits to 5 and the maximum number of digits to 5. |

|

Scale |

Use for prompts where the required response is a numeric rating. This is an example prompt. On a scale of 1 to 5, how likely... |

|

Alphanumeric |

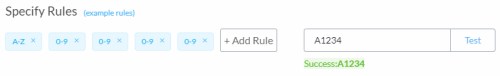

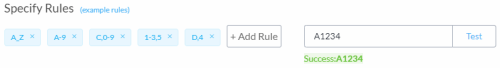

Use for prompts where the required response is a sequence of alphanumeric characters. Add rules and Studio expects the return value to have the same number and type of characters as the rule. Return value A1234 adheres to the rule of one alphabetic character followed by four numeric characters. Return value A1234 also adheres to the following rule. The first character can only be A, B, C, D, or F. It is followed by four numeric characters. Return value A1234 adheres to the following rule. The second character can be any alphanumeric character. The fourth character must be 1, 2, 3, or 5. The last character can only be D or 4. |

|

Custom Alphanumeric String |

Use for prompts where the required response is a sequence of alphanumeric characters and the expected number of characters can vary. Use cases for this include United Kingdom postcodes and flight numbers. You set the minimum and maximum lengths of the character sequence. |

|

Payment - Credit Card Number |

Returns the speech-to-text value of the credit card to the payment variable payment.CARD_NUMBER. Returns the speech-to-text value of last four digits of the credit card to the payment variable payment.CARD_LAST_FOUR_DIGITS. Returns the speech-to-text value of the credit card type to the payment variable payment.CARD_TYPE. Optionally, you can assign a variable to Credit Card Number. The text-to-speech return value masks all but the first six and last four digits of the credit card number. Optionally, you can assign a variable to Credit Card Number Check. The text-to-speech return value is valid or invalid in all lowercase letters. It performs a Luhn algorithm check on the credit card. Optionally, you can assign a variable to Credit Card Type. |

|

Payment - Credit Card Expiry |

Returns the speech-to-text value of the payment credit card expiry in MMYY format to the payment variable payment.CARD_EXPIRY. An example value is 0517. |

|

Payment - Credit Card CVC |

Returns the speech-to-text value of the payment credit card CVC to the payment variable payment.CARD_CVC. |
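The Credit Card Number Check and the masked Credit Card Number described above can be illustrated with a short Python sketch. This is not Studio's implementation. The function names are hypothetical, and the `*` mask character is an assumption, since the documentation does not say which character Studio uses.

```python
def luhn_is_valid(card_number: str) -> bool:
    """Return True if the digits in card_number pass the Luhn checksum."""
    digits = [int(ch) for ch in card_number if ch.isdigit()]
    if not digits:
        return False
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def card_check_value(card_number: str) -> str:
    """Mirror the lowercase valid / invalid return value."""
    return "valid" if luhn_is_valid(card_number) else "invalid"


def mask_card_number(card_number: str) -> str:
    """Keep the first six and last four digits; mask the rest (assumed '*')."""
    if len(card_number) <= 10:
        return card_number
    hidden = len(card_number) - 10
    return card_number[:6] + "*" * hidden + card_number[-4:]
```

For the widely used test number 4111111111111111, `card_check_value` returns `valid` and `mask_card_number` returns `411111******1111`.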

Custom Form Types

Custom form types suit use cases where the return values match DTMF or grammar values.

- Create a custom form type to define the DTMF or grammar values, if you have not already done so. See Custom Forms.

- Within the Form node:

  - Select the custom form type.

  - Select an Automatic Speech Recognition (ASR) provider. Depending on your Studio business plan, Google or Lumenvox may be available.

  - Select the language. Refer to the languages supported by Google. Lumenvox supports the following languages.

    - English (United States)
    - English (Australia)
    - English (UK)
    - Spanish (Mexico)
    - Spanish (South America)
    - Portuguese (Brazil)
    - French (Canada)
    - Italian
    - German

  - Fill in the Return Values.

- If the return value matches more than one grammar value, as happens with the similar-sounding names John and Jon, Studio captures the first match. You may want to verify that this is the best match. Studio stores all matched grammars in the confusables array. See Custom Form Confusables.

Open Form Types

Open form types suit use cases where return values are picked from free-form conversational dialog.

Use the built-in open form types or create your own. To create your own, see Open Forms. The built-in open form types are as follows.

| Built-in Open Form Types | Data Captured | NLP Engine | Languages Supported |
|---|---|---|---|
| System Currency | Use to capture currency values. If the unit of currency is not spoken, USD is returned. | Google Cloud Dialogflow. Uses system entity @sys.unit-currency. | English |
| System Date | Use to capture the date spoken in a conversational way. | Google Cloud Dialogflow. Uses system entity @sys.date. | English, Spanish, German |
| System Calendar | Use to capture the date, time, and duration of a meeting as you would naturally say when making a calendar appointment booking. | Google Cloud Dialogflow. Uses system entities @sys.date, @sys.time, and @sys.duration. | English |
| System YesNo | Use to capture a yes or no response spoken in a conversational way. | Google Cloud Dialogflow. Uses an entity managed by Five9. | English, German |

Follow these steps when using open form types.

- Select the open form type.

- Select the language. Refer to the languages supported by Google. When using Amazon Lex V2, select the language the bot supports.

- Fill in the Return Values.

You can override the selected language dynamically at call time to support multiple languages from a single form node. To do this, select a language variable from the NLP Settings tab. This feature is supported with open form types, and custom form types when Google is the selected ASR.

Return Values

From the Form tab, select the form type, fill in the fields specific to the form type, and fill in the Return Values.

| Field | Description |
|---|---|
| Value | Returned data. |
| Assign the value to variable | Select a variable to assign to the returned data. If the variable has not been created yet, type the name of the variable in the field. |
| Default value | Assign a default value to the returned data. The default value itself can be a variable. Type two curly brackets {{ and select from the available options. |

For an open form type with Google Cloud Dialogflow, if the returned data (the intent) does not contain the parameter, or the value at the parameter path is null, Studio assigns the default value to the variable. If the intent contains the parameter path and the value is an empty string, Studio assigns an empty string to the variable.
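The default-value rule for Dialogflow parameters can be sketched in Python as follows. The function name and the flat `parameters` mapping are hypothetical stand-ins for the intent data, and the sketch assumes a null value arrives as Python `None`. It is meant only to show the difference between a missing or null parameter and an empty string.

```python
from typing import Any, Mapping


def resolve_return_value(parameters: Mapping[str, Any], name: str, default: Any) -> Any:
    """Pick the value assigned to the variable for one return value.

    A missing parameter or a null (None) value falls back to the default.
    An empty string is a real value and is assigned as-is.
    """
    value = parameters.get(name)
    if value is None:
        return default
    return value
```

With a default of `unknown`, a missing or null `city` parameter yields `unknown`, while `{"city": ""}` yields an empty string.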

Return Values from the Built-in Open Form Types

System Currency

Use to capture currency values. If the unit of currency is not spoken, USD is returned.

| Return Values | Description |
|---|---|
| Currency | Returns the captured amount and unit of currency. An example is 40 USD. |
| Amount | Returns the captured amount of currency. An example is 40. |
| Unit-Currency | Returns the captured currency string in ISO 4217 format. An example is USD. |

System Date

Use to capture the date spoken in a conversational way.

| Return Values | Description |
|---|---|
| Date | Returns the captured date in YYYY-MM-DD format. |
| Date Formatted | Returns the captured date and time. |

System Calendar

Use to capture the date, time, and duration of a meeting as you would naturally say when making a calendar appointment booking.

| Return Values | Description |
|---|---|
| Minimum Time | Returns the meeting start time in the following format: yyyy-mm-ddThh:mm:ss.nnn±hh:mm. An example return value follows: 2019-05-15T14:00:00.000+10:00. Google Calendar APIs expect this format. |
| Maximum Time | Returns the meeting end time in the following format: yyyy-mm-ddThh:mm:ss.nnn±hh:mm. An example return value follows: 2019-05-15T14:40:00.000+10:00. Google Calendar APIs expect this format. |
| Duration | Returns the duration of time allocated to the meeting in minutes. An example return value is 40. |
| Date | Returns the meeting start date in yyyy-mm-dd format. This is helpful when using the confirmation tab to confirm the date and when sending an SMS. |
| Time | Returns the meeting start time in hh:mm format. This is helpful when using the confirmation tab to confirm the start time and when sending an SMS. |
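To see how the System Calendar return values relate to each other, the following Python sketch parses the Minimum Time and Maximum Time examples and derives the Duration, Date, and Time values from them. The function name and dictionary keys are hypothetical. Studio returns these values directly, so the sketch is only useful for checking or post-processing them outside Studio.

```python
from datetime import datetime


def summarize_meeting(minimum_time: str, maximum_time: str) -> dict:
    """Derive duration (minutes), start date, and start time from the two timestamps."""
    start = datetime.fromisoformat(minimum_time)
    end = datetime.fromisoformat(maximum_time)
    return {
        "duration": int((end - start).total_seconds() // 60),
        "date": start.date().isoformat(),   # yyyy-mm-dd
        "time": start.strftime("%H:%M"),    # hh:mm
    }


summary = summarize_meeting(
    "2019-05-15T14:00:00.000+10:00",
    "2019-05-15T14:40:00.000+10:00",
)
```

For the example timestamps, `summary` holds a duration of 40, a date of 2019-05-15, and a time of 14:00.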

System YesNo

Use to capture a yes or no response spoken in a conversational way.

| Return Values | Description |
|---|---|
| YesNo | Returns either yes or no in all lowercase letters. |

Text-to-Speech

Specify each of the prompts to play to the caller.

The Add Pause, Volume, Pitch, Emphasis, Say As, and Rate menus above the TTS prompt apply SSML parameters. See Text-to-Speech Prompts.

| Prompt Field Name | Description |
|---|---|
| Prompt | The caller hears this prompt first. The prompt tells the caller what to do. |
| Fallback Prompt | The caller hears the fallback prompt when the system fails to hear the caller's response, or the caller's response is not understood. The fallback prompt should provide the caller with greater context on how to respond to the prompt. An example fallback prompt: "This is a natural language system. Just say what you would like to do. For example, send me to the billing department or to speak to a representative." |
| No Input Prompt | The caller hears the no input prompt, followed by the fallback prompt, when the caller does not respond to the prompt. |
| No Match Prompt | The caller hears the no match prompt, followed by the fallback prompt, when the caller's response to the prompt is not understood. Studio uses natural language, so no match indicates a low transcription confidence score. |

Phrase Hints

The Phrase Hints tab is applicable to open form types when the selected ASR is Google.

Use phrase hints to improve speech recognition accuracy. Enter phrase hints in the Phrase Hints field, in a datastore, or both. If you use a datastore, use boost values to prioritize one word over another. For more about the datastore structure, see Phrase Hints Datastore.

| Field | Description |
|---|---|
| Phrase Hints | Enter a comma-separated list of keywords to improve speech recognition accuracy. For example, if the caller's response is likely to include a month of the year, include a comma-separated list of months in the phrase hints. The list can include variables, which enable the phrase hints to change dynamically according to the variable values. |
| Choose Datastore for Phrase Hints | Select the datastore that stores the phrase hints. |
| Choose Datastore Column for Phrase Hints | Select the column in the datastore with the list of words or phrases. |
| Datastore Boost Column | Select the column in the datastore with the boost values. To select the column dynamically at call time, if the datastore has multiple boost columns, select a variable to store the name of the column. |
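As an illustration of the two ways to supply phrase hints, the Python sketch below builds a comma-separated list of months and reads phrase and boost pairs from datastore-like rows. The row layout, the column names (`phrase`, `boost_default`, `boost_sales`), and the boost numbers are all hypothetical. The real datastore structure is described in Phrase Hints Datastore.

```python
from typing import Dict, List, Tuple

MONTHS = [
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
]


def build_phrase_hints(phrases: List[str]) -> str:
    """Join phrases into the comma-separated form used in the Phrase Hints field."""
    return ", ".join(p.strip() for p in phrases if p.strip())


def hints_from_rows(rows: List[Dict[str, str]], phrase_column: str,
                    boost_column: str) -> List[Tuple[str, float]]:
    """Pair each phrase with the boost value from the chosen boost column."""
    return [(row[phrase_column], float(row[boost_column])) for row in rows]


# Hypothetical datastore rows with two boost columns.
rows = [
    {"phrase": "billing", "boost_default": "5", "boost_sales": "2"},
    {"phrase": "upgrade", "boost_default": "2", "boost_sales": "10"},
]
month_hints = build_phrase_hints(MONTHS)
sales_hints = hints_from_rows(rows, "phrase", "boost_sales")
```

Passing the boost column name as an argument mirrors the option of selecting the boost column through a variable at call time.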

Speech Recognizer

|

Field |

Description |

||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

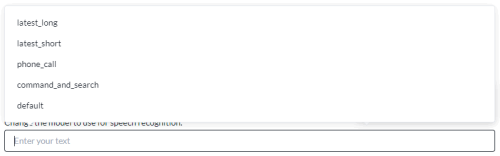

Speech recognition model |

Available for open type forms once the language is selected on the Form tab. To list the models available, clear the field, and then click in the field. Select from the available options. Start typing to reduce the list of models to those that match your typed text. Note:

Studio does not validate the model. Use the letter case on display. If the provider fails to recognize the model, the form node fails with an event recorded in the system log. The event is CALLER_RESPONSE where param1 is the current node, param2 is the next node, and param3 is the recognizer error. See also System Log Events. Studio supports these Google Cloud Dialogflow voice models.

|

||||||||||||

|

Assign transcribed text to variable |

Select the variable to store the caller's transcribed responses. If the variable has not been created yet, type the name of the variable. |

||||||||||||

|

Assign confidence score to variable |

Select the variable to store the confidence score associated with the transcribed text. If the variable has not been created yet, type the name of the variable. Use of this variable is optional. You can use it to ignore transcriptions with a low confidence. The confidence score is a numeric value between 0 and 1: 0 meaning no confidence and 1 meaning complete confidence. An example confidence score is 0.45. |

||||||||||||

|

Assign recording file to audio variable |

Select the variable to store the caller's raw verbatim audio as an audio file. If the variable has not been created yet, type the name of the variable. |

||||||||||||

|

Speech Controls |

Select the box to enable Barge In. Barge in enables the caller to interrupt the system and progress to the next prompt. It enables experienced callers to move rapidly through the system to get to the information that they want. You may want to disable barge in at key times, such as when your prompts or menu systems change. Note:

For open form types using Google Cloud Dialogflow, if you enable Barge in, the prompt time should not exceed 60 seconds. If Studio detects non-speech energy, the call routes to the no-match event handler after 60 seconds. |

||||||||||||

|

Minimum Transcription Confidence Score |

When the Minimum Transcription Confidence Score is higher than the confidence score, the call is directed to the No Match Event Handler. The confidence score is a representation of the system's confidence when interpreting caller input. The confidence score is a numeric value between 0 and 1: 0 meaning no confidence and 1 meaning complete confidence. An example confidence score is 0.45. |

||||||||||||

|

Inter Digit Timeout |

Select the time in seconds to wait between each DTMF key press. The longer the timeout, the greater the allowable time between each key press. When the timeout is reached, Studio finalizes the collected DTMF input so far and moves on to the next node in the call flow. |
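The Minimum Transcription Confidence Score rule in the table above amounts to a simple threshold comparison. The Python sketch below shows that comparison. The function name and the `match` and `no_match` labels are hypothetical and do not come from Studio.

```python
def route_on_confidence(confidence: float, minimum: float) -> str:
    """Return 'no_match' when the minimum score is higher than the confidence score."""
    for score in (confidence, minimum):
        if not 0.0 <= score <= 1.0:
            raise ValueError("scores must be between 0 and 1")
    return "no_match" if minimum > confidence else "match"
```

With the example confidence score of 0.45, a minimum of 0.6 routes to no match, while a minimum of 0.45 or lower lets the response through.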

Event Handler

The event handlers are as follows. Each event handler has a Count value specifying the number of attempts to run before triggering the event handler. The event handler routes the call to a task canvas. If the canvas has not been created yet, type the name of the canvas.

| Event Handler | Description |
|---|---|
| No Input Event Handler | Select a task canvas. The call routes to the canvas if no input is detected from the caller after multiple attempts. |
| No Match Event Handler | Select a task canvas. The call routes to the canvas if no match is detected from the caller after multiple attempts. |
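One way to picture how the Count values interact with the two event handlers is the Python sketch below. It assumes that no input and no match attempts are counted separately and that a handler triggers as soon as its count is reached. Both are assumptions made for illustration, and the outcome labels and return strings are hypothetical.

```python
from typing import Iterable


def run_form(outcomes: Iterable[str], no_input_count: int, no_match_count: int) -> str:
    """Walk through attempt outcomes and report where the call goes next."""
    limits = {"no_input": no_input_count, "no_match": no_match_count}
    seen = {"no_input": 0, "no_match": 0}
    for outcome in outcomes:
        if outcome == "match":
            return "next_node"
        seen[outcome] += 1
        if seen[outcome] >= limits[outcome]:
            # The attempt count for this failure type is used up.
            return outcome + "_event_handler"
    return "awaiting_input"
```

For example, with a No Input count of 2 and a No Match count of 3, the sequence no input, no match, no input ends at the no input event handler.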

NLP Settings

| Setting | Description |
|---|---|
| Query Parameters | Pass query parameters in JSON format to Google Cloud Dialogflow. This feature is supported with open form types when Google is the selected ASR. Example: `{"timeZone": "America/New_York", "geoLocation": {"latitude": 12, "longitude": 85}, "contexts": [{"name": "outage", "lifespanCount": 5}], "resetContexts": true, "sessionEntityTypes": [{"name": "snack", "entityOverrideMode": "ENTITY_OVERRIDE_MODE_OVERRIDE", "entities": {"value": "Tea", "synonyms": ["tea", "Tea"]}}]}` Learn more about query parameters with Dialogflow ES and query parameters with Dialogflow CX. |
| Language Variable | Select a language variable to dynamically override the ASR selected language at call time. This is useful when building multilingual voice tasks. This feature is supported with open form types, and custom form types when Google is the selected ASR. |
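Because the Query Parameters field expects JSON, it can help to build and check the value with a JSON library before pasting it into Studio. The Python sketch below does this with the standard `json` module. The helper names are hypothetical, and the keys shown are only the ones that appear in the example in the table above.

```python
import json
from typing import Dict, List


def build_query_parameters(time_zone: str, contexts: List[Dict],
                           reset_contexts: bool = False) -> str:
    """Serialize a small set of query parameters to a JSON string."""
    params = {
        "timeZone": time_zone,
        "contexts": contexts,
        "resetContexts": reset_contexts,
    }
    return json.dumps(params)


def is_valid_json_object(text: str) -> bool:
    """Return True only if text parses as a JSON object."""
    try:
        return isinstance(json.loads(text), dict)
    except json.JSONDecodeError:
        return False


query_parameters = build_query_parameters(
    "America/New_York",
    [{"name": "outage", "lifespanCount": 5}],
    reset_contexts=True,
)
```

A check like `is_valid_json_object` catches common slips such as a trailing comma before the closing brace or a missing opening brace.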

Advanced ASR Settings

This tab is applicable to all custom and open form types. The initial settings are those applied to the custom or open form type.

Do not tune unless you have a clear understanding of how these settings affect speech recognition. Generally speaking, the default settings are the best. To return a setting to its default value, remove the value from the field and click outside the field.

Studio supports a maximum of 5 minutes of caller audio when using Google Cloud Speech-to-Text. See https://cloud.google.com/speech-to-text/quotas.

| Timeout Settings | Description |
|---|---|
| No Input Timeout | Wait time, in milliseconds, from when the prompt finishes to when the system directs the call to the No Input Event Handler as it has been unable to detect the caller’s speech. |
| Speech Complete Timeout, Speech Incomplete Timeout | Use these settings for responses with an interlude to ensure the system listens until the caller's speech is finished. Speech Complete Timeout measures wait time, in milliseconds, from when the caller stops talking to when the system initiates an end-of-speech event. It should be longer than the Inter Result Timeout to prevent overlaps. To customize Speech Complete Timeout, turn off Single Utterance. Speech Incomplete Timeout measures wait time, in milliseconds, from when incoherent background noise begins and continues uninterrupted to when the system initiates an end-of-speech event. |
| Speech Start Timeout | Wait time, in milliseconds, from when the prompt starts to play to when the system begins to listen for caller input. This is similar to the scenario where barge in is enabled. |
| Inter Result Timeout | Wait time, in milliseconds, from when the caller stops talking to when the system initiates an end-of-speech event as it has been unable to detect interim results. The typical use case is a caller reading out numbers, who might pause between the digits. Keep the value shorter than Speech Complete Timeout to avoid overlaps. Inter Result Timeout does not reset if there is background noise. Speech Complete Timeout does reset if there is background noise. If there is background noise, Inter Result Timeout may be more reliable in determining when the speech is complete. By default, Inter Result Timeout is set to 0 and Single Utterance is turned on. To customize Inter Result Timeout, turn off Single Utterance. Set Inter Result Timeout from 500 ms to 3000 ms based on the maximum pause time in the caller response. When Single Utterance is turned off and the selected ASR is Google Cloud Dialogflow, Inter Result Timeout cannot be set to 0. The value of Inter Result Timeout changes from 0 to 1000 ms. The Inter Result Timeout setting is not available for Amazon Lex. |
| Barge In Sensitivity | Raising the sensitivity requires the caller to speak louder above background noise. Applicable when Barge In is enabled. The scale is logarithmic. See Lumenvox Sensitivity Settings. |
| Auto Punctuation | Select to add punctuation to the caller's speech. |
| Profanity Filter | Select to remove profanities from the caller's speech. |
| Single Utterance | A single utterance is a string of things said without long pauses. A single utterance can be yes or no, or a request such as "Can I book an appointment?" or "I need help with support." The single utterance setting is turned on by default. Turn off Single Utterance to customize Speech Complete Timeout and Inter Result Timeout. You may decide to turn off Single Utterance if the caller is expected to pause as part of the conversation. For example, the caller may read out a sequence of numbers and pause in appropriate places. The single utterance setting is not available for Amazon Lex. |
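The relationships among Single Utterance, Inter Result Timeout, and Speech Complete Timeout described in the table can be sketched as a small checker in Python. The function names, the `dialogflow` provider label, and the message texts are hypothetical. Studio enforces its own rules in the interface, so this only restates the documented constraints.

```python
from typing import List

DIALOGFLOW = "dialogflow"  # hypothetical label for Google Cloud Dialogflow


def effective_inter_result_timeout(single_utterance: bool, inter_result_ms: int,
                                   provider: str) -> int:
    """With Single Utterance off on Dialogflow, a value of 0 becomes 1000 ms."""
    if not single_utterance and provider == DIALOGFLOW and inter_result_ms == 0:
        return 1000
    return inter_result_ms


def check_timeouts(single_utterance: bool, inter_result_ms: int,
                   speech_complete_ms: int) -> List[str]:
    """Return a list of problems; an empty list means the settings are consistent."""
    problems: List[str] = []
    if single_utterance:
        if inter_result_ms != 0:
            problems.append("Turn off Single Utterance to customize Inter Result Timeout.")
        return problems
    if not 500 <= inter_result_ms <= 3000:
        problems.append("Inter Result Timeout is outside the 500 to 3000 ms range.")
    if inter_result_ms >= speech_complete_ms:
        problems.append("Inter Result Timeout should be shorter than Speech Complete Timeout.")
    return problems
```

For instance, with Single Utterance off, an Inter Result Timeout of 1000 ms and a Speech Complete Timeout of 2000 ms pass the check, while 4000 ms and 3000 ms produce two problems.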

Confirmation

The confirmation tab is not available for open form types.

Use the confirmation tab to prompt the caller to confirm their response.

| Condition | Description |
|---|---|
| Not Required | The caller does not confirm their response. This is the default behavior. |
| Required | The caller is required to confirm their response. |
| Speech Recognizer Confidence | The caller is required to confirm their response if it falls below the speech recognizer minimum transcription confidence score. The confidence score is representative of the system's confidence when interpreting the caller's response. Raise the Minimum Transcription Confidence Score to require a higher level of accuracy from the caller. |

Configure these settings for the case where the caller confirms their response.

| Settings | Description |
|---|---|
| Barge In | Select the box to enable Barge In. Barge In enables the caller to confirm their response before the confirmation prompt has finished playing. It enables the caller to move rapidly through the call flow. The caller may miss the confirmation prompt repeating their response. |
| Confirmation Prompt | The caller hears the confirmation prompt. Include [user_input] to play the response the prompt is confirming. If there are multiple response values, [user_input] captures the first value. Example: "You entered: [user_input]. Say Yes or press 1 if this is correct. Otherwise say No or press 2." |
| No Input Prompt | The caller hears the no input prompt when the caller does not confirm. |
| No Match Prompt | The caller hears the no match prompt when the caller's confirmation response is not understood. |
| Maximum Number of Attempts to Confirm | After the maximum number of attempts to confirm the response, the call flows to the No Match Event Handler. |
| No Input Timeout | Wait time, in milliseconds, from when the prompt finishes to when the system directs the call to the No Input Event Handler as it has been unable to detect the caller’s speech. |
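The confirmation conditions and the [user_input] placeholder can be illustrated with the Python sketch below. The function names are hypothetical, and the simple string replacement is only a stand-in for however Studio renders the prompt. The condition labels match the Condition table above.

```python
from typing import List


def needs_confirmation(condition: str, confidence: float, minimum: float) -> bool:
    """Decide whether the caller is asked to confirm their response."""
    if condition == "Required":
        return True
    if condition == "Speech Recognizer Confidence":
        # Confirm only when the response falls below the minimum score.
        return confidence < minimum
    return False  # "Not Required" is the default behavior.


def render_confirmation_prompt(template: str, responses: List[str]) -> str:
    """Substitute the first response value for [user_input]."""
    first = responses[0] if responses else ""
    return template.replace("[user_input]", first)


prompt = render_confirmation_prompt(
    "You entered: [user_input]. Say Yes or press 1 if this is correct. "
    "Otherwise say No or press 2.",
    ["12345", "67890"],
)
```

Here `prompt` begins with "You entered: 12345." because only the first of the two response values is used.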