Generative AI

Use the generative AI node in any of the following ways:

- Match the caller's intent from their natural language speech.
- Summarize a conversation between caller and virtual agent.
- Create custom large language model (LLM) prompts within a modeled use case.
- Match the caller's query to a database of frequently asked questions.

Name

Give the node a name.

API Key

Enter your OpenAI API key for the integration.

Alternatively, enter the name of a Studio variable that contains the OpenAI API key. Type two curly brackets {{ to select from the variables in your account.

If you use a Studio variable, you cannot use Preview Response. See Response.
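Fields such as API Key and User Prompt accept {{variable}} references. The following Python sketch shows one way such references could be resolved before a request is sent. It is hypothetical. The function name resolve_variables, the variable names, and the rule that unknown names are left untouched are all assumptions for illustration, not documented Studio behavior.

```python
import re

# Matches {{name}}, allowing optional spaces inside the braces (assumed syntax rule).
_VAR_PATTERN = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def resolve_variables(template: str, variables: dict[str, str]) -> str:
    """Replace each {{name}} with its value; leave unknown names unchanged."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        # Assumption: an undefined variable is left as literal text.
        return variables.get(name, match.group(0))

    return _VAR_PATTERN.sub(substitute, template)


# Hypothetical Studio variables, for illustration only.
studio_vars = {"openAiKey": "sk-example", "callerQuery": "I want to pay my bill"}
print(resolve_variables("{{openAiKey}}", studio_vars))  # sk-example
print(resolve_variables("Caller said: {{callerQuery}}", studio_vars))  # Caller said: I want to pay my bill
```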

Model

Select the ChatGPT model you are using.

Use Case

Select from the following use cases.

| Use Case | Description |
|---|---|
| Intent Detection | Match the caller's intent from their natural language speech. |
| Summarization | Summarize a conversation between caller and virtual agent. |
| Custom Prompting | Create custom LLM prompts within a modeled use case. |
| Datastore FAQ | Match the caller's query to a database of frequently asked questions. |

Intent Detection

Select the Intent Detection use case to match the caller's intent from their natural language speech.

On the Intents tab, the first tab in the Configuration settings, fill in the following settings.

| Setting | Description |
|---|---|
| Add Intents | Enter one or more intents. An intent can be any word or word sequence. |
| User Prompt | Enter a speech-to-text representation of the caller's query. In production, you typically capture the query on an earlier node and store it in a variable. Type two curly brackets {{ to select from the variables in your account. For testing, you can type sample text directly. |
| Detect Multiple Intents | When not selected (default), OpenAI matches the query to a single intent based on the confidence level for each intent. When selected, OpenAI may match the query to multiple intents based on its algorithm and the confidence level for each intent. |

For further settings, see Open AI Parameters and Settings.
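The Detect Multiple Intents setting switches between returning one intent and possibly several. This Python sketch illustrates that difference, assuming a confidence score is available for each intent. The scores dictionary, the select_intents function, and the 0.5 threshold are invented for illustration. The actual matching is performed by OpenAI, and the node's internal logic is not documented here.

```python
def select_intents(
    scores: dict[str, float], detect_multiple: bool, threshold: float = 0.5
) -> list[str]:
    """Pick intents from per-intent confidence scores (hypothetical model)."""
    if not scores:
        return []
    if not detect_multiple:
        # Default: return only the single highest-confidence intent.
        return [max(scores, key=scores.get)]
    # Detect Multiple Intents: return every intent at or above the assumed
    # threshold, highest confidence first.
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [intent for intent, score in ranked if score >= threshold]


scores = {"billing": 0.82, "cancel service": 0.61, "tech support": 0.12}
print(select_intents(scores, detect_multiple=False))  # ['billing']
print(select_intents(scores, detect_multiple=True))  # ['billing', 'cancel service']
```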

Summarization

Select the Summarization use case to summarize a conversation between caller and virtual agent.

In the Assign summary text to variable field, assign the conversation summary to a Studio variable.

In the call flow, place this node at the point where the conversation between the caller and the virtual agent is complete. If the node runs while the conversation is still in progress, the remainder of the conversation is lost.

For further settings, see the Settings tab.
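This hypothetical Python sketch shows how a finished conversation could be packaged into an OpenAI Chat Completions message list for summarization. The Turn class, the build_summary_messages function, and the instruction wording are assumptions, not the node's actual prompt. The transcript can only contain the turns that exist when the node runs, which is why the node belongs after the conversation is complete.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # e.g. "Caller" or "Virtual agent"
    text: str


def build_summary_messages(turns: list[Turn]) -> list[dict[str, str]]:
    """Build chat messages asking the model to summarize a finished conversation."""
    # Only the turns passed in are summarized; later turns are never seen.
    transcript = "\n".join(f"{turn.speaker}: {turn.text}" for turn in turns)
    return [
        {
            "role": "system",
            "content": "Summarize the following conversation between a caller and a virtual agent.",
        },
        {"role": "user", "content": transcript},
    ]


conversation = [
    Turn("Caller", "I need to change my delivery address."),
    Turn("Virtual agent", "Sure, what is the new address?"),
    Turn("Caller", "12 High Street."),
]
messages = build_summary_messages(conversation)
print(messages[1]["content"])
```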

Custom Prompting

Select the Custom Prompting use case to create custom LLM prompts within a modeled use case.

On the Prompt tab, the first tab in the Configuration settings, fill in the following settings.

| Setting | Description |
|---|---|
| System Context | Provide context to OpenAI. Examples: "You are a translator bot and you can translate given input to Spanish." or "Extract an alphanumeric string in the format of three letters followed by three numbers from the following text. Return only the alphanumeric string, formatted with a space between each character. Here is the text:" |
| User Prompt | Enter a speech-to-text representation of the caller's query. In production, you typically capture the query on an earlier node and store it in a variable. Type two curly brackets {{ to select from the variables in your account. For testing, you can type sample text directly. |

For further settings, see Open AI Parameters and Settings.
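In the OpenAI Chat Completions API, a request carries a list of messages, each with a role. This Python sketch assumes that System Context becomes the system message and User Prompt becomes the user message. That mapping, the build_custom_prompt function, and the example model name are assumptions for illustration.

```python
import json


def build_custom_prompt(system_context: str, user_prompt: str, model: str) -> dict:
    """Map the Prompt tab fields onto a Chat Completions request body (assumed mapping)."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_context},  # System Context
            {"role": "user", "content": user_prompt},  # User Prompt
        ],
    }


body = build_custom_prompt(
    system_context="You are a translator bot and you can translate given input to Spanish.",
    user_prompt="Where is the train station?",
    model="gpt-3.5-turbo",  # example model name only
)
print(json.dumps(body, indent=2))
```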

Datastore FAQ

Select the Datastore FAQ use case to match the caller's query to a database of frequently asked questions.

On the Datastore tab, the first tab in the Configuration settings, fill in the following settings.

| Setting | Description |
|---|---|
| Datastore | Select the datastore in your Studio account that contains the FAQ database. |
| Map Question Column | Select the datastore column that contains the FAQ questions. |
| Map Answer Column | Select the datastore column that contains the FAQ answers. |
| User Prompt | Enter a speech-to-text representation of the caller's query. In production, you typically capture the query on an earlier node and store it in a variable. Type two curly brackets {{ to select from the variables in your account. For testing, you can type sample text directly. |

For further settings, see Open AI Parameters and Settings.
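One common way to let a model answer from an FAQ is to place the question and answer pairs in its context. This Python sketch takes that approach to show what the Map Question Column and Map Answer Column settings select. The row format, the build_faq_context function, the column names faq_q and faq_a, and the instruction text are all hypothetical. They do not necessarily reflect how the node works.

```python
def build_faq_context(
    rows: list[dict[str, str]], question_column: str, answer_column: str
) -> str:
    """Format datastore rows as numbered Q/A pairs for the model's context."""
    lines = ["Answer the caller using only these frequently asked questions:"]
    for number, row in enumerate(rows, start=1):
        # The mapped columns decide which fields are read from each row.
        lines.append(f"{number}. Q: {row[question_column]}")
        lines.append(f"   A: {row[answer_column]}")
    return "\n".join(lines)


faq_rows = [
    {"faq_q": "What are your opening hours?", "faq_a": "9am to 5pm, Monday to Friday."},
    {"faq_q": "How do I reset my password?", "faq_a": "Use the Forgot password link."},
]
context = build_faq_context(faq_rows, question_column="faq_q", answer_column="faq_a")
print(context)
```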

Open AI Parameters

From Configuration, select the Open AI Parameters tab.

The Open AI Parameters tab is available for the intent detection, custom prompting, and datastore FAQ use cases.

| Setting | Description |
|---|---|
| Temperature | Temperature controls how often the model outputs a less likely token. The higher the temperature, the more random (and usually more creative) the output. The temperature ranges between 0 and 1. As the temperature approaches 0, the output becomes more deterministic and repetitive. |
| Max Tokens | The maximum number of tokens to generate. Depending on the model, the prompt and the completion share a limit of up to 2,048 or 4,000 tokens. One token is roughly four characters of standard English text. Ideally, you rarely reach this limit, because the model stops either when it considers the response finished or when it generates a stop sequence you have defined. |
| Top P | Top P sampling (or nucleus sampling) is an alternative to sampling with temperature. The model considers only the tokens that make up the top_p probability mass. For example, when Top P is 0.1, only the tokens comprising the top 10% of probability mass are considered. |
| Presence Penalty | Presence penalty penalizes new tokens based on whether they have already appeared in the text so far. A higher value increases the model's likelihood to talk about new topics. |
| Frequency Penalty | Frequency penalty penalizes new tokens based on how often they have already appeared in the text so far. A higher value decreases the model's likelihood to repeat the same line verbatim. |
| Stop Sequences | A stop sequence is a string of characters (one or more tokens). When the model generates a stop sequence, the API stops generating further tokens. Enter up to four stop sequences. |
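To make these settings concrete, the following toy Python sketch applies them to made-up next-token scores (logits). The penalties follow the adjustment described in OpenAI's parameter documentation: subtract count × frequency_penalty, plus presence_penalty once if the token has appeared at all. The sketch then applies a temperature-scaled softmax and Top P (nucleus) filtering. The vocabulary, the logits, and the function names are illustrative. Real model internals are not exposed by the node.

```python
import math
from collections import Counter


def apply_penalties(
    logits: dict[str, float], generated: list[str], presence_penalty: float, frequency_penalty: float
) -> dict[str, float]:
    """Lower the logits of tokens that already appear in the generated text."""
    counts = Counter(generated)
    adjusted = {}
    for token, logit in logits.items():
        count = counts[token]
        # Frequency penalty grows with each repeat; presence penalty applies once.
        presence = 1.0 if count > 0 else 0.0
        adjusted[token] = logit - count * frequency_penalty - presence * presence_penalty
    return adjusted


def softmax_with_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Convert logits to probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        # Temperature 0: deterministic, all probability on the top token.
        best = max(logits, key=logits.get)
        return {token: (1.0 if token == best else 0.0) for token in logits}
    scaled = {token: logit / temperature for token, logit in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {token: math.exp(value - peak) for token, value in scaled.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def top_p_filter(probs: dict[str, float], top_p: float) -> dict[str, float]:
    """Keep the most likely tokens until their total probability reaches top_p, then renormalize."""
    kept = {}
    cumulative = 0.0
    for token, prob in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[token] = prob
        cumulative += prob
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {token: prob / total for token, prob in kept.items()}


logits = {"yes": 2.0, "no": 1.0, "maybe": 0.5}  # made-up next-token scores
penalized = apply_penalties(logits, generated=["yes", "yes"], presence_penalty=0.5, frequency_penalty=0.5)
probs = softmax_with_temperature(penalized, temperature=0.7)
filtered = top_p_filter(probs, top_p=0.7)
print(filtered)
```

Because "yes" was already generated twice, both penalties push its score down, so "no" becomes the most likely token.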

Refer to the OpenAI API reference for more information on these settings.
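The settings in the table correspond to request fields in the OpenAI API: temperature, max_tokens, top_p, presence_penalty, frequency_penalty, and stop. This Python sketch validates node settings against the limits stated on this page and returns them under those field names. The default values, the build_parameters and estimate_tokens helpers, and the four-characters-per-token estimate used as a pre-check are assumptions for illustration.

```python
import json
import math
from typing import Optional

MAX_STOP_SEQUENCES = 4  # "Enter up to four stop sequences."


def estimate_tokens(text: str) -> int:
    """Rough estimate: one token is about four characters of English text."""
    return math.ceil(len(text) / 4)


def build_parameters(
    prompt: str,
    context_limit: int,
    temperature: float = 0.7,
    max_tokens: int = 256,
    top_p: float = 1.0,
    presence_penalty: float = 0.0,
    frequency_penalty: float = 0.0,
    stop: Optional[list[str]] = None,
) -> dict:
    """Validate node settings and return them under OpenAI request field names."""
    if not 0.0 <= temperature <= 1.0:  # range stated on this page
        raise ValueError("temperature must be between 0 and 1")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1")
    stop = stop or []
    if len(stop) > MAX_STOP_SEQUENCES:
        raise ValueError("at most four stop sequences are allowed")
    # The prompt and the completion share the model's token limit.
    if estimate_tokens(prompt) + max_tokens > context_limit:
        raise ValueError("prompt plus max_tokens exceeds the model's token limit")
    params = {
        "temperature": temperature,
        "max_tokens": max_tokens,
        "top_p": top_p,
        "presence_penalty": presence_penalty,
        "frequency_penalty": frequency_penalty,
    }
    if stop:
        params["stop"] = stop
    return params


print(json.dumps(build_parameters("What are your opening hours?", context_limit=4000, stop=["\n"]), indent=2))
```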

Settings

From Configuration, select the Settings tab.

The Settings tab is available for all use cases.

| Setting | Description |
|---|---|
| Fetch Timeout | The maximum time in seconds that Studio waits to retrieve data from the API request. |
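A fetch timeout caps how long the caller waits for a response. This Python sketch shows the general technique with the standard library's concurrent.futures: it waits up to timeout_seconds for a result and otherwise returns a fallback. The fetch_with_timeout function, the fallback behavior, and the simulated slow call are assumptions. What Studio does when the timeout expires is not described on this page.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout
from typing import Callable, TypeVar

T = TypeVar("T")


def fetch_with_timeout(fetch: Callable[[], T], timeout_seconds: float, fallback: T) -> T:
    """Run fetch() and return its result, or fallback if it takes longer than timeout_seconds."""
    executor = ThreadPoolExecutor(max_workers=1)
    future = executor.submit(fetch)
    try:
        return future.result(timeout=timeout_seconds)
    except FutureTimeout:
        # Stop waiting; the worker thread may still finish in the background.
        return fallback
    finally:
        executor.shutdown(wait=False)


def slow_api_call() -> str:
    time.sleep(0.5)  # stands in for a slow API request
    return "late answer"


print(fetch_with_timeout(slow_api_call, timeout_seconds=0.1, fallback="timed out"))  # timed out
print(fetch_with_timeout(lambda: "fast answer", timeout_seconds=1.0, fallback="timed out"))  # fast answer
```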

Response

From the Response section, click Preview Response. The response JSON name-value pairs are displayed.

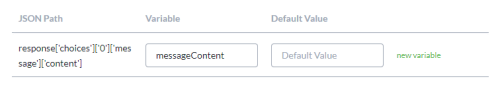

The JSON name-value pair of most interest is usually captured in the Studio variable messageContent, which you can find by scrolling down to the table of return values. Use the fields in this table to assign a different variable to a JSON name-value pair and to set a default value for the Studio variable.
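In an OpenAI Chat Completions response, the generated text sits at choices[0].message.content. This Python sketch assumes that messageContent corresponds to that path, and it falls back to a default value when the path is missing, similar in spirit to the Default Value field. The abbreviated response body and the extract_message_content function are illustrative assumptions.

```python
import json

# Abbreviated example of a Chat Completions response body.
raw_response = """
{
  "id": "chatcmpl-example",
  "object": "chat.completion",
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "Your order ships tomorrow."},
      "finish_reason": "stop"
    }
  ]
}
"""


def extract_message_content(body: str, default: str = "") -> str:
    """Return choices[0].message.content, or default if the JSON or path is invalid."""
    try:
        data = json.loads(body)
        return data["choices"][0]["message"]["content"]
    except (json.JSONDecodeError, KeyError, IndexError, TypeError):
        return default


message_content = extract_message_content(raw_response, default="Sorry, I didn't catch that.")
print(message_content)  # Your order ships tomorrow.
```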

Detailed Response

Learn about the response section of the user interface in detail.

To match JSON name-value pairs of interest to Studio variables:

- Click Preview Response to see the JSON name-value pairs.

  Alternatively, to enter JSON directly, click the pencil icon. The pencil turns into a tick. Use the simple editor to make sure your JSON is valid, then click the tick icon to return to preview mode and continue with these steps.

- Select a JSON name-value pair.

  The path to the selected name-value pair is displayed in the JSON Path field. You may need to switch between Data and Meta to locate a specific name-value pair.

- To edit the JSON path, select Editable JSON.

  Following are some ways to use the editable JSON facility. For more information, see Editable JSON.

  | Example | Description |
  |---|---|
  | response['0']['email'] | The path refers to the first email record. |
  | response['*']['email'] | Replace the number zero with an asterisk to refer to all the email records. |
  | response['{{index}}']['email'] | Replace the number zero with a variable to iterate over all the email records. |

- In the Assign path to variable field, name the variable that stores the JSON name-value pair.

- In the Default Value (optional) field, assign a default value to the variable.

  The default value itself can be a variable. Type two curly brackets {{ and select from the available options.

- Click Assign.

  The JSON path and variable are added to the table of return values.

- Repeat these steps for each additional JSON name-value pair.

You can update the table of return values at any time by assigning different variables to the JSON name-value pairs and changing the default values.
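The editable JSON examples use three kinds of path segments: a numeric index, an asterisk wildcard, and a {{variable}}. This Python sketch is a hypothetical evaluator for paths written in that style. It is not Studio's implementation. The resolve_path function, the sample response, and the rule that a wildcard applies the rest of the path to every element are assumptions for illustration.

```python
import re
from typing import Any

_KEY_PATTERN = re.compile(r"\['([^']*)'\]")  # captures the text inside ['...']
_VAR_PATTERN = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def resolve_path(data: Any, path: str, variables: dict[str, Any]) -> Any:
    """Evaluate a path such as response['0']['email'] against parsed JSON."""
    keys = _KEY_PATTERN.findall(path)  # the leading "response" names the root
    return _walk(data, keys, variables)


def _walk(node: Any, keys: list[str], variables: dict[str, Any]) -> Any:
    if not keys:
        return node
    # Substitute {{name}} with the variable's value before the lookup.
    key = _VAR_PATTERN.sub(lambda m: str(variables[m.group(1)]), keys[0])
    rest = keys[1:]
    if key == "*":
        # Wildcard: apply the remaining path to every element.
        items = node.values() if isinstance(node, dict) else node
        return [_walk(item, rest, variables) for item in items]
    if isinstance(node, list):
        return _walk(node[int(key)], rest, variables)
    return _walk(node[key], rest, variables)


response = [
    {"name": "Ana", "email": "ana@example.com"},
    {"name": "Ben", "email": "ben@example.com"},
]
print(resolve_path(response, "response['0']['email']", {}))  # ana@example.com
print(resolve_path(response, "response['*']['email']", {}))  # ['ana@example.com', 'ben@example.com']
for index in range(len(response)):
    print(resolve_path(response, "response['{{index}}']['email']", {"index": index}))
```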